At WWDC 2017 Apple presented Core ML, one of the major recent steps forward in mobile software, designed to significantly change the iOS user experience. Apple not only let users experience their devices in a new way but also made it easy for developers to use complex machine learning models in their apps.

So what is machine learning? We hear about it a lot today, but do we actually understand what it is? In short, it is a way of using statistics and mathematics to make a machine «learn», that is, make decisions without being explicitly programmed to do so. In fact, much of what is called artificial intelligence (AI) today is, in practice, machine learning. Solving a problem with ML usually consists of two stages: training a model on a dataset (a collection of examples prepared in advance, often labeled by experts) and then using that model to solve similar problems.
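The two stages can be illustrated with a deliberately tiny example. The sketch below uses a nearest-centroid classifier, chosen here only for brevity (it is not what Core ML uses): training averages the feature vectors of each label, and inference picks the label whose average is closest to the input. `LabeledPoint` and `NearestCentroidModel` are hypothetical names.

```swift
// Toy illustration of the two ML stages: train on a labeled dataset,
// then use the trained model to predict labels for new inputs.
// Assumption: all feature vectors have the same length.

struct LabeledPoint {
    let features: [Double]
    let label: String
}

struct NearestCentroidModel {
    // One averaged feature vector (centroid) per label, learned from data.
    private(set) var centroids: [String: [Double]] = [:]

    // Stage 1: training. Average the feature vectors of each label.
    static func train(on dataset: [LabeledPoint]) -> NearestCentroidModel {
        var sums: [String: [Double]] = [:]
        var counts: [String: Int] = [:]
        for point in dataset {
            let current = sums[point.label] ?? Array(repeating: 0, count: point.features.count)
            sums[point.label] = zip(current, point.features).map { $0 + $1 }
            counts[point.label, default: 0] += 1
        }
        var model = NearestCentroidModel()
        for (label, sum) in sums {
            let n = Double(counts[label]!)
            model.centroids[label] = sum.map { $0 / n }
        }
        return model
    }

    // Stage 2: inference. Return the label whose centroid is closest,
    // or nil if the model was trained on an empty dataset.
    func predict(_ features: [Double]) -> String? {
        centroids.min(by: { lhs, rhs in
            squaredDistance(lhs.value, features) < squaredDistance(rhs.value, features)
        })?.key
    }

    private func squaredDistance(_ a: [Double], _ b: [Double]) -> Double {
        zip(a, b).reduce(0.0) { sum, pair in
            let d = pair.0 - pair.1
            return sum + d * d
        }
    }
}
```

Real models are far more complex and, with Core ML, are trained elsewhere; the point is only that training produces a model once, and prediction then reuses it cheaply.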

The hard thing about ML is that it requires a lot of computing power, which mobile devices usually lack. In practice, nobody wants an app that drains the battery or uses too much network traffic, however useful it is or however new the experience it offers. Moreover, nobody wants to share potentially personal data with a cloud service.

So how did Apple deal with these challenges?

First of all, privacy: Core ML does not send any data to the cloud, so all computations run directly on the device. Secondly, performance: Core ML is built on low-level frameworks that are heavily optimized for Apple hardware. Thirdly, the model is not trained on the device: it is trained in advance, and the device only uses it to solve the user's problems.

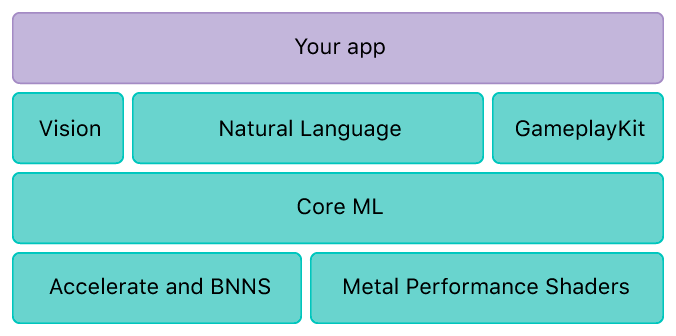

According to Apple's documentation, Core ML is built on top of Metal Performance Shaders (MPS), which let you get as much performance as possible out of the device's GPU; Accelerate, a library for highly optimized execution of heavy mathematical computations; and BNNS (Basic Neural Network Subroutines), part of Accelerate, which provides basic building blocks commonly used in neural networks.

To get a better understanding of BNNS, let's dive a bit deeper and look at a specific problem, image recognition, and how it is solved under the hood. Simplified, recognition consists of passing the input image through a chain of filters (layers), each of which transforms it in some way, and comparing the output with the patterns stored in the model. BNNS provides these filters, although so far only three types are available: convolution, pooling, and fully connected layers.
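To make the "chain of filters" idea concrete, here is a conceptual sketch of two of those filter types, a 2D convolution followed by 2x2 max pooling, applied to a tiny grayscale image stored as `[[Double]]`. It is plain Swift written for illustration and does not call the BNNS API; `Image`, `convolve`, and `maxPool2x2` are hypothetical names.

```swift
// Conceptual filter chain: convolution, then max pooling.
// Assumption: images and kernels are rectangular 2D arrays.
typealias Image = [[Double]]

// "Valid" convolution (no padding, stride 1). As in most CNN libraries,
// this is technically cross-correlation: the kernel is not flipped.
func convolve(_ image: Image, kernel: Image) -> Image {
    guard let imageRow = image.first, let kernelRow = kernel.first else { return [] }
    let outHeight = image.count - kernel.count + 1
    let outWidth = imageRow.count - kernelRow.count + 1
    guard outHeight > 0, outWidth > 0 else { return [] }
    var output = Array(repeating: Array(repeating: 0.0, count: outWidth), count: outHeight)
    for y in 0..<outHeight {
        for x in 0..<outWidth {
            var sum = 0.0
            for i in 0..<kernel.count {
                for j in 0..<kernelRow.count {
                    sum += image[y + i][x + j] * kernel[i][j]
                }
            }
            output[y][x] = sum
        }
    }
    return output
}

// 2x2 max pooling with stride 2: keep the strongest response in each
// 2x2 block. A trailing odd row or column is dropped.
func maxPool2x2(_ image: Image) -> Image {
    stride(from: 0, to: image.count - 1, by: 2).map { y in
        stride(from: 0, to: image[y].count - 1, by: 2).map { x in
            max(image[y][x], image[y][x + 1], image[y + 1][x], image[y + 1][x + 1])
        }
    }
}
```

A real network chains many such layers with learned kernels and ends with fully connected layers that produce class scores; BNNS runs these operations with optimized code instead of nested loops.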

There are three main areas where Core ML is easy to use and integrate into your application: vision (pattern recognition) with the Vision framework, natural language understanding (e.g. analyzing the words and structure of a phrase), and adapting gameplay to the user's behavior with GameplayKit.

Let's take a closer look at the Vision framework to see how it can be used «in the wild». For this purpose I've prepared a small demo app, Prizma, that recognizes objects around you using the iPhone camera. Out of the box it detects faces, rectangles, barcodes, and text, along with their positions, and it classifies other objects using custom ML models.

Detecting any of the out-of-the-box objects is as simple as creating a Vision request and specifying how to handle its results.

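```swift
lazy var rectangleDetectionRequest: VNDetectRectanglesRequest = {
    let rectDetectRequest = VNDetectRectanglesRequest(completionHandler: self.handleDetectedRectangles)
    // Limit and tune the rectangles the request looks for.
    rectDetectRequest.maximumObservations = 8   // at most 8 rectangles per image
    rectDetectRequest.minimumConfidence = 0.6   // drop detections below 60% confidence
    rectDetectRequest.minimumAspectRatio = 0.3  // drop rectangles that are too narrow
    return rectDetectRequest
}()
```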

In the request we can specify the maximum number of objects detected at once, the minimum confidence (from 0 to 1) a detection needs in order to be returned, and the minimum aspect ratio of the rectangles to detect. Quite flexible, as you can see. In this app, handling a detection means drawing it on a layer on top of the camera view.
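To clarify what the three parameters mean, here is a plain-Swift model of their effect on a list of candidate rectangles. It is not how Vision is implemented internally; `Candidate` and `filterCandidates` are hypothetical names, the aspect ratio is taken as the shorter side over the longer side (which matches the parameter's 0 to 1 range), and keeping the most confident candidates when the limit is hit is an assumption.

```swift
// Conceptual model of maximumObservations, minimumConfidence and
// minimumAspectRatio. Not Vision's actual implementation.
struct Candidate {
    let width: Double
    let height: Double
    let confidence: Double  // 0...1, like VNConfidence

    // Shorter side over longer side, so the value lies in 0...1.
    var aspectRatio: Double {
        let longer = max(width, height)
        return longer > 0 ? min(width, height) / longer : 0
    }
}

// Assumes maximumObservations > 0.
func filterCandidates(_ candidates: [Candidate],
                      maximumObservations: Int,
                      minimumConfidence: Double,
                      minimumAspectRatio: Double) -> [Candidate] {
    let accepted = candidates
        .filter { $0.confidence >= minimumConfidence && $0.aspectRatio >= minimumAspectRatio }
        .sorted { $0.confidence > $1.confidence }  // assumption: keep the most confident
    return Array(accepted.prefix(maximumObservations))
}
```

With the values used in the app (8, 0.6, 0.3), a 100x10 strip is rejected as too narrow even at high confidence, while a 100x50 rectangle with confidence 0.9 is kept.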

```swift
fileprivate func handleDetectedRectangles(request: VNRequest?, error: Error?) {
    // This handler may run off the main thread, so UI work is
    // dispatched to the main queue.
    if let nsError = error as NSError? {
        DispatchQueue.main.async {
            self.presentAlert("Rectangle Detection Error", error: nsError)
        }
        return
    }
    DispatchQueue.main.async {
        guard let drawLayer = self.cameraView?.layer,
              let results = request?.results as? [VNRectangleObservation] else {
            return
        }
        self.draw(rectangles: results, onImageWithBounds: drawLayer.bounds)
        drawLayer.setNeedsDisplay()
    }
}
```
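The `draw(rectangles:onImageWithBounds:)` method is not shown in this article. Whatever it does, it has to convert each observation's `boundingBox`, which Vision reports in normalized coordinates (0 to 1) with the origin at the bottom-left corner of the image, into the layer's coordinate space, whose origin on iOS is at the top-left. Below is a minimal sketch of that conversion; `layerRect(forNormalized:in:)` is a hypothetical helper, and it assumes the layer shows the whole image stretched to its bounds (no aspect-fill cropping or letterboxing).

```swift
import Foundation

// Convert a normalized, bottom-left-origin bounding box into a rect in a
// top-left-origin layer. Assumes the image fills the layer exactly.
func layerRect(forNormalized box: CGRect, in bounds: CGRect) -> CGRect {
    let width = box.size.width * bounds.size.width
    let height = box.size.height * bounds.size.height
    let x = bounds.origin.x + box.origin.x * bounds.size.width
    // Flip the y axis: Vision measures from the bottom, the layer from the top.
    let y = bounds.origin.y + (1 - box.origin.y - box.size.height) * bounds.size.height
    return CGRect(x: x, y: y, width: width, height: height)
}
```

For example, a box at normalized (0.25, 0.5) with size 0.5 x 0.25 in a 200 x 100 layer maps to a 100 x 25 rect at (50, 25).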

Then, for each frame captured by the camera, we call a method that executes the selected requests.

```swift
fileprivate func performVisionRequest(image: CGImage, orientation: CGImagePropertyOrientation) {
    // Collect only the requests enabled in the settings.
    var requests = [VNImageBasedRequest]()
    if Settings.provider.facesSelected {
        requests.append(faceLandmarkRequest)
        requests.append(faceDetectionRequest)
    }
    if Settings.provider.rectsSelected { requests.append(rectangleDetectionRequest) }
    if Settings.provider.textSelected { requests.append(textDetectionRequest) }
    if Settings.provider.barcodeSelected { requests.append(barcodeDetectionRequest) }

    let imageRequestHandler = VNImageRequestHandler(cgImage: image, orientation: orientation, options: [:])
    // perform(_:) is synchronous, so run it off the main thread.
    DispatchQueue.global(qos: .userInitiated).async {
        do {
            try imageRequestHandler.perform(requests)
        } catch let error as NSError {
            // Alerts are UI, so present them on the main queue.
            DispatchQueue.main.async {
                self.presentAlert("Image Request Failed", error: error)
            }
        }
    }
}
```
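The request-selection step above can also be modeled without Vision, which keeps the mapping from settings to requests testable without a camera. In this sketch, `DetectorOptions`, `RequestKind`, and `requests(for:)` are hypothetical stand-ins for the app's settings flags and its lazily created Vision requests; note that enabling faces adds two requests (landmarks and face rectangles).

```swift
// Hypothetical, Vision-free model of which requests each setting enables.
struct DetectorOptions: OptionSet {
    let rawValue: Int
    static let faces    = DetectorOptions(rawValue: 1 << 0)
    static let rects    = DetectorOptions(rawValue: 1 << 1)
    static let text     = DetectorOptions(rawValue: 1 << 2)
    static let barcodes = DetectorOptions(rawValue: 1 << 3)
}

// Stand-in names for the Vision requests created in the app.
enum RequestKind: String {
    case faceLandmarks, faceRectangles, rectangles, textRectangles, barcodes
}

func requests(for options: DetectorOptions) -> [RequestKind] {
    var result: [RequestKind] = []
    if options.contains(.faces) { result += [.faceLandmarks, .faceRectangles] }
    if options.contains(.rects) { result.append(.rectangles) }
    if options.contains(.text) { result.append(.textRectangles) }
    if options.contains(.barcodes) { result.append(.barcodes) }
    return result
}
```

Using an `OptionSet` instead of four separate booleans is just one possible design; it lets the whole selection be passed around and compared as a single value.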

As you can see, working with Vision is declarative and quite straightforward.

Let's switch to the second part of the app: using custom models. You can either train your own model or use someone else's. Apple's developer site offers some basic models, although, frankly speaking, they are not very accurate. You can also search the web for open source models. Since Xcode 10, creating your own models has become even easier: training a model only requires a dataset, which you drag and drop into Xcode. You can find more info about that here.

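To perform classification we also create a request, this time a `VNCoreMLRequest` that specifies the model to use. In this app the models are not created or downloaded on the fly, so the models we want to use have to be prepared and bundled in advance. (Core ML can compile a downloaded `.mlmodel` file on the device with `MLModel.compileModel(at:)`, but the demo does not do that.)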

```swift
guard let visionModel: VNCoreMLModel = Settings.provider.currentModel.model else { return }
let classificationRequest = VNCoreMLRequest(model: visionModel, completionHandler: handleClassification)
// Scale the frame to the model's input size, cropping the center of the longer side.
classificationRequest.imageCropAndScaleOption = .centerCrop
do {
    try self.visionSequenceHandler.perform([classificationRequest], on: pixelBuffer)
} catch {
    print("Throws: \(error)")
}
```

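Unlike the rectangle request, `VNCoreMLRequest` has no confidence threshold of its own, so we filter by confidence in the completion handler. The classification result is an array of `VNClassificationObservation` objects, each holding a label (`identifier`) and its `confidence`. To handle it, we simply display the top results on a label.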

```swift
func updateClassificationLabel(labelString: String) {
    // UI updates must happen on the main queue.
    DispatchQueue.main.async {
        self.resultLabel?.text = labelString
    }
}

func handleClassification(request: VNRequest, error: Error?) {
    guard error == nil,
          let observations = request.results as? [VNClassificationObservation] else {
        updateClassificationLabel(labelString: "")
        return
    }
    // Take up to the top four results and keep those above 20% confidence.
    // prefix(4) is safe for an empty array, unlike 0...min(3, count - 1).
    let classifications = observations
        .prefix(4)
        .filter { $0.confidence > 0.2 }
        .map(self.textForClassification)
    // An empty list joins to "", which clears the label.
    updateClassificationLabel(labelString: classifications.joined(separator: "\n"))
}

func textForClassification(classification: VNClassificationObservation) -> String {
    let pc = Int(classification.confidence * 100)
    return "\(classification.identifier)\nConfidence: \(pc)%"
}
```
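Because `VNClassificationObservation` instances are awkward to create in a unit test, one option is to keep the label formatting in a pure function over a plain value type. In this sketch, `Classification` is a hypothetical stand-in for the observation, and `labelText(for:limit:threshold:)` mirrors the handler's logic: take the first four results, drop those at or below 20% confidence, and format the rest.

```swift
// Hypothetical stand-in for VNClassificationObservation.
struct Classification {
    let identifier: String
    let confidence: Float
}

// Same steps as the handler: top results, confidence filter, formatting.
func labelText(for classifications: [Classification],
               limit: Int = 4,
               threshold: Float = 0.2) -> String {
    classifications
        .prefix(limit)
        .filter { $0.confidence > threshold }
        .map { "\($0.identifier)\nConfidence: \(Int($0.confidence * 100))%" }
        .joined(separator: "\n")
}
```

The handler would then only map observations to `Classification` values and pass the resulting string to `updateClassificationLabel(labelString:)`.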

The full source code of this demo app can be found here.

At our software development company we have seen that, even though ML is a complicated field of computer science, Core ML lets developers use it easily and declaratively, giving users the full performance of their devices while respecting their privacy.