AI has already surpassed humans in many activities requiring thoroughness, accuracy, and patience. That doesn’t stop it from stumbling over such elementary things as telling a turtle from a rifle. Given the consequences this can have (and sometimes already has had, from suspicion of a criminal offense to traffic fatalities), some experts even wonder whether it is Artificial Intelligence or Artificial Stupidity we should fear more. Why does AI make such weird mistakes, and how can they be fixed?

The recent accident in which an Uber driverless car hit a woman sheds light on the main reason why AI can be so helpless in certain situations: no matter how smart it is, it thinks differently from us humans. That’s why, instead of making the only sensible immediate decision in such a situation (first, do everything possible to prevent the collision), it spent several seconds trying to identify what kind of object was ahead. That is how it works.


It's a Mad, Mad, Mad, Mad World

When making a decision, AI relies on built-in algorithms and a massive amount of data, which it processes in order to reach certain ‘conclusions’. Erik Brynjolfsson and Tom Mitchell note that, to give AI a solid basis for proper decision-making, we should set well-defined inputs and outputs, clearly define goals and metrics, give short and precise instructions, and avoid long chains of reasoning that rely on common sense. That is, we should make the environment as unambiguous and predictable as possible.

But such ideal conditions are hard to find in the real world. You can’t expect all road users to follow traffic rules flawlessly, all handwriting to be legible, or all situations to be clear and unambiguous. The human brain is adapted to operate in an uncertain, ever-changing environment, quite often creating that uncertainty and ambiguity itself. To act successfully in this world, AI should learn to think more like a human.

Learning the Human Way

And how do we humans learn to make decisions? Most of the experience we gain from early childhood comes from trial and error. But relying on empirical knowledge alone would be reckless, given how helpless human infants are and how many dangers the world around them poses. That’s why, in addition to trial and error, targeted training and instruction take place from the very first days of a child’s life. And nature itself has taken care of us by equipping us with a set of inborn instincts.

Today, scientists are trying to apply what we know about how humans learn to machine learning. For example, OpenAI has developed an algorithm that lets AI learn from its own mistakes much as babies do. The technique, called Hindsight Experience Replay (HER), allows AI to review its previous attempts at completing a specific task. Like human learning, it relies on reinforcement. When we master a new action, our first results rarely match the goals we set. But we learn from those poor results, too, and can later use them to achieve other goals. So even though our attempts have not succeeded, their outcome is still useful. For our brain, this kind of learning runs as a background, subconscious process. HER formalizes it for machine learning by reframing failures as successes: an attempt that missed its intended goal is replayed as if the state it actually reached had been the goal all along.
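The relabeling trick at the heart of HER can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not OpenAI’s implementation: the one-dimensional ‘walk along a line’ environment, the sparse reward (0 for reaching the goal, -1 otherwise) and all names such as `her_relabel_final` are hypothetical, and it uses only the simplest ‘final’ strategy (treat the state the agent ended in as the goal). The learner that would train on the replay buffer is omitted.

```python
from dataclasses import dataclass, replace
from typing import List


@dataclass(frozen=True)
class Transition:
    state: int        # position before the action
    action: int       # +1 (right) or -1 (left)
    next_state: int   # position after the action (the "achieved goal")
    goal: int         # the goal the agent was pursuing
    reward: float


def reward_fn(achieved: int, goal: int) -> float:
    # Hypothetical sparse reward: 0.0 when the goal is reached, -1.0 otherwise.
    return 0.0 if achieved == goal else -1.0


def run_episode(start: int, goal: int, actions: List[int]) -> List[Transition]:
    """Roll out a fixed action sequence in a toy 1-D environment."""
    episode, pos = [], start
    for a in actions:
        nxt = pos + a
        episode.append(Transition(pos, a, nxt, goal, reward_fn(nxt, goal)))
        pos = nxt
    return episode


def her_relabel_final(episode: List[Transition]) -> List[Transition]:
    """Hindsight relabeling, 'final' strategy: pretend the state the agent
    actually ended in was the goal all along, and recompute the rewards."""
    final_achieved = episode[-1].next_state
    return [replace(t, goal=final_achieved,
                    reward=reward_fn(t.next_state, final_achieved))
            for t in episode]


# The agent aimed for position 5 but only reached position 3.
episode = run_episode(start=0, goal=5, actions=[1, 1, 1])
replay_buffer = episode + her_relabel_final(episode)

print([t.reward for t in episode])                     # [-1.0, -1.0, -1.0]
print([t.reward for t in her_relabel_final(episode)])  # [-1.0, -1.0, 0.0]
```

An agent that aimed for position 5 but stopped at 3 gets no useful signal from the original episode, where every step is a failure; the relabeled copy contains a success that teaches it how to reach position 3, which may later help it reach 5.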

Development of Neural Networks

Modern AI algorithms resemble the human neural network, in which tiny neurons pass signals to each other, thereby creating and accessing our memories of different objects and their properties. Later, these memories serve as a basis for reasoning, anticipation, and decision-making.

In a similar manner, the simple computing elements of an artificial neural network (nodes) form stronger or weaker links by repeatedly adjusting their connections as they ingest training data. Over time, the system settles on the connection patterns that work best and adopts them as defaults, forming the equivalent of what we would call an automatic reaction in human behavior. Later, it can apply this knowledge to new situations, predicting the outcome from the statistics of previous attempts. Generally, the more training data there is, the ‘smarter’ your neural network gets and the more precise its predictions become.
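The idea of connections that grow ‘stronger or weaker’ with training can be illustrated with a single artificial neuron. The sketch below assumes a logistic (sigmoid) neuron trained by plain stochastic gradient descent on a toy logical-AND task; real networks stack many such units and train them with backpropagation, and the learning rate and epoch count here are arbitrary choices.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train_neuron(data, epochs: int = 2000, lr: float = 0.5):
    """Train one two-input logistic neuron with stochastic gradient descent."""
    w = [0.0, 0.0]  # connection strengths, starting neutral
    b = 0.0         # bias (the neuron's baseline tendency to fire)
    for _ in range(epochs):
        for (x1, x2), target in data:
            y = sigmoid(w[0] * x1 + w[1] * x2 + b)
            err = y - target  # gradient of the log-loss w.r.t. the pre-activation
            # Strengthen or weaken each connection in proportion to its input.
            w[0] -= lr * err * x1
            w[1] -= lr * err * x2
            b -= lr * err
    return w, b


# Toy task: output 1 only when both inputs are 1 (logical AND).
AND_DATA = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
w, b = train_neuron(AND_DATA)


def predict(x1: float, x2: float) -> float:
    return sigmoid(w[0] * x1 + w[1] * x2 + b)


for (x1, x2), target in AND_DATA:
    print((x1, x2), "target:", target, "output:", round(predict(x1, x2), 3))
```

After training, both input weights end up positive and the bias negative, so the neuron ‘fires’ only when both inputs are on: the connection pattern that fits the data has become its default reaction.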

Of course, the resemblance is far from exact. The human brain contains some 100 billion neurons, which form some 1,000 trillion connections. Its very nature is different, too: it relies on electrochemical rather than digital signals. Unlike the machine ‘brain’, the human brain needs far less data to build connections and can apply knowledge to new situations incomparably more easily and quickly (something that underlies creativity). So computer neural networks are only a very rough replica of what is going on in our heads.


Sealed Secret

The challenging side of AI self-education is that the more human-like it gets, the less understandable and controllable the processes in its ‘brain’ become. In this sense, the saying we usually apply to people, “You can’t get into someone’s head”, holds true for modern self-taught artificial neural networks as well. That is what happened with Facebook chatbots which, when left to themselves, quickly developed their own language, incomprehensible to humans.

With chatbots, which are rather simple programs, this poses no real danger. Nevertheless, it taught scientists to be more cautious with more complex systems. Not that you are going to meet a Terminator around the corner tomorrow. But just imagine a self-driving car deciding whether it is better to swerve or to run you over.

‘Bad reasoning’ is the most frequent cause of AI’s foolish mistakes. What is worse, the machine can’t explain why it made a particular decision, which makes it really hard to find out what went wrong and at what stage. Recently, scientists have started to tackle this challenge with software that debugs AI systems by reverse-engineering their learning process. It tests a neural network with a large number of inputs, checking each ‘neuron’ one by one, and tells the network where its responses are wrong so it can correct itself.
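The paragraph does not name the debugging tool, so the following is not its code. It is a toy sketch, assuming a tiny hand-weighted network and a small labelled test suite, of two ideas such testing tools build on: measuring which hidden ‘neurons’ a set of inputs has actually exercised (neuron coverage) and collecting the inputs on which the network’s answer is wrong. All weights, names and the intended ‘either input is on’ task are hypothetical.

```python
from typing import List, Sequence, Tuple


def relu(z: float) -> float:
    return max(0.0, z)


# Hypothetical network: 2 inputs -> 3 hidden ReLU neurons -> 1 binary output.
# Intended task: output 1 if at least one input is "on" (close to 1).
HIDDEN_W = [(1.0, 0.0), (0.0, 1.0), (-1.0, -1.0)]
HIDDEN_B = [0.0, 0.0, 0.5]
OUT_W = [1.0, 1.0, -2.0]


def forward(x: Sequence[float]) -> Tuple[List[float], int]:
    """Return the hidden activations and the binary prediction."""
    hidden = [relu(w0 * x[0] + w1 * x[1] + b)
              for (w0, w1), b in zip(HIDDEN_W, HIDDEN_B)]
    score = sum(w * h for w, h in zip(OUT_W, hidden))
    return hidden, int(score > 0.5)


def audit_network(cases, threshold: float = 0.0):
    """Run labelled cases; return (neuron coverage, list of wrong answers)."""
    covered = set()
    failures = []
    for x, expected in cases:
        hidden, pred = forward(x)
        # A neuron counts as "covered" once some input drives it above the threshold.
        covered.update(i for i, h in enumerate(hidden) if h > threshold)
        if pred != expected:
            failures.append((x, expected, pred))
    return len(covered) / len(HIDDEN_W), failures


SMALL_SUITE = [((0.0, 0.0), 0)]
FULL_SUITE = SMALL_SUITE + [((1.0, 0.0), 1), ((0.0, 1.0), 1), ((0.3, 0.3), 0)]

for name, suite in (("small", SMALL_SUITE), ("full", FULL_SUITE)):
    coverage, failures = audit_network(suite)
    print(f"{name}: coverage={coverage:.0%}, failures={failures}")
```

A test suite that exercises only one of the three hidden neurons reports no errors; widening it to cover every neuron exposes a borderline input that the network misclassifies. In a real workflow, such failing inputs would be fed back as training data so the network can correct itself.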

Relying on Human Teachers

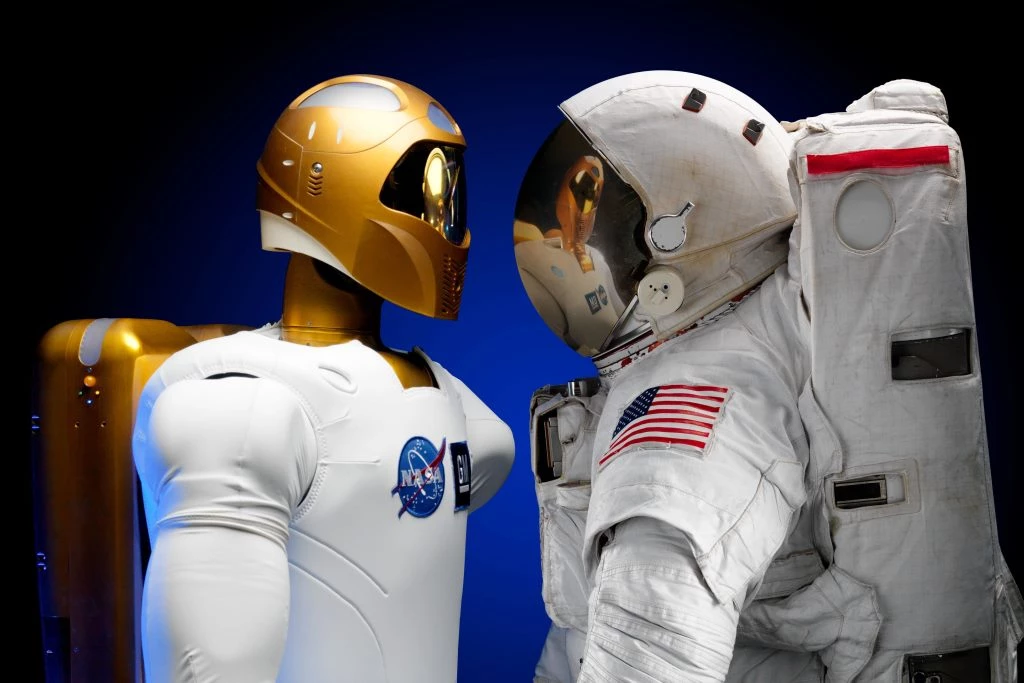

Learning by trial and error alone is least justified in risky or ambiguous situations. In such cases, just as children benefit from adults’ instructions, AI can also benefit from human teachers. Some steps have already been taken in this direction. In one experiment, for example, AI learned to distinguish human hair in photos (a task that is very difficult for a machine) by following its trainers’ preliminary instructions. In another study, AI was taught to play Montezuma’s Revenge by following instructions in plain English.

The results of these experiments are really promising, but there are still many obstacles on the way to smooth human-AI communication. Today, AI can understand only simple, direct commands, which is far from how people communicate in daily life. Nikolaos Mavridis identifies 10 aspects of human-robot interactive communication that have yet to be achieved, including multiple speech acts, mixed-initiative dialogue, non-verbal communication, etc.

Another problem is that to explain something, we must clearly understand it ourselves, which is rarely true of humans. A pattern described as far back as the 1950s by the philosopher and scientist Michael Polanyi shows that, when making decisions, we rely only partially on evident, explicit knowledge. Most of the knowledge we use every day is tacit: we are not aware that we possess and apply it. We acquire it in a subconscious, practical way, through interactions with each other and the world around us. That’s why it is difficult to transfer, especially when it comes to teaching a machine.


Reasoning vs. Gut Instincts

Another thing that distinguishes our mental processes from those of a machine is that, even before we are born, the human brain is not a tabula rasa. We have a set of inborn basic instincts, a kind of core knowledge that helps us understand objects, actions, numbers, and space, and develop common sense. In the case of machines, the role of such instincts is played by activation functions.
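For readers unfamiliar with the term, an activation function is the fixed rule that decides how strongly an artificial neuron responds to its combined input. The three common choices sketched below are picked by the network’s designer rather than learned from data, which is presumably what the comparison with inborn instincts refers to. The sketch uses only Python’s standard `math` module.

```python
import math


def sigmoid(z: float) -> float:
    # Squashes any input into the range (0, 1).
    return 1.0 / (1.0 + math.exp(-z))


def tanh(z: float) -> float:
    # Squashes any input into the range (-1, 1), centred on zero.
    return math.tanh(z)


def relu(z: float) -> float:
    # Passes positive signals unchanged and silences negative ones.
    return max(0.0, z)


for z in (-2.0, 0.0, 2.0):
    print(f"z={z:+.1f}  sigmoid={sigmoid(z):.3f}  tanh={tanh(z):.3f}  relu={relu(z):.1f}")
```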

Should such ‘instincts’ (in the form of innate machinery) be built into AI? Some scientists insist they should. Their list includes notions of causality, cost-benefit analysis, translational invariance, contextualization, etc. Scientists argue fiercely over whether it is appropriate to build such innate algorithms into AI and what consequences it might have. Indeed, it took millions of years for our instincts to evolve. Can this lengthy process be compressed effectively? One approach scientists propose is a backward analysis of what actually takes place when we behave instinctively or intuitively.
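Translational invariance, one of the proposed ‘innate’ mechanisms, is already built into convolutional neural networks by design. The sketch below, assuming a toy one-dimensional signal and a hand-picked pattern detector (all names and values are illustrative), shows the mechanism: the same kernel weights are reused at every position, so shifting the input shifts the feature map, and a global max-pooling step then discards position entirely. Strictly speaking, the convolution itself is translation-equivariant; it is the pooling that makes the final response invariant.

```python
from typing import List, Sequence


def conv1d(signal: Sequence[float], kernel: Sequence[float]) -> List[float]:
    """'Valid' 1-D cross-correlation: the same kernel weights slide over every position."""
    k = len(kernel)
    return [sum(signal[i + j] * kernel[j] for j in range(k))
            for i in range(len(signal) - k + 1)]


def detect(signal: Sequence[float], kernel: Sequence[float]) -> float:
    # Global max pooling keeps how strongly the pattern occurred, not where.
    return max(conv1d(signal, kernel))


PATTERN = [1.0, -1.0, 1.0]          # hypothetical feature detector
early = [0, 1, -1, 1, 0, 0, 0, 0]   # pattern near the start
late = [0, 0, 0, 0, 1, -1, 1, 0]    # same pattern shifted right by 3

print(conv1d(early, PATTERN))  # feature map for the early pattern
print(conv1d(late, PATTERN))   # the peak moves 3 positions to the right
print(detect(early, PATTERN), detect(late, PATTERN))  # identical responses
```

The network does not have to learn separately that the pattern at the start and the pattern near the end are the same thing; the architecture guarantees it, much as an inborn bias would.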

That is what researchers from MIT did to understand the algorithm behind intuitive decision-making when solving complicated problems. They asked a group of top students to find a way to optimize an airline network. Their approaches to the problem were then analyzed and encoded in machine-readable form.

What’s Next?

So far, we have dwelt on AI’s ability to reason and make logical decisions. But logical-mathematical intelligence is only one of the many kinds we use every day. Some psychologists (notably Howard Gardner, in his theory of multiple intelligences) count at least 9 different types of human intelligence, including musical, existential, interpersonal, kinesthetic, etc. Building these types of intelligence is especially important for general-purpose AI, but, like the ability to take context into account and solve moral dilemmas, it remains mostly a matter for the future.

It is hard to predict how long it might take for AI to evolve to the level of the ‘carbon’ brain, or whether we will benefit from the results, but until then, we should be ready for AI to make more weird mistakes. After all, to quote Dostoyevsky’s “Crime and Punishment”, ‘it takes something more than intelligence to act intelligently’, and artificial intelligence is no exception.


Let’s see together what the future holds. Better still, let’s create this future together. Stfalcon invites you to collaborate!